AI4TRUST Pilot – Frequently Asked Questions (FAQ)

- What is the AI4TRUST project?

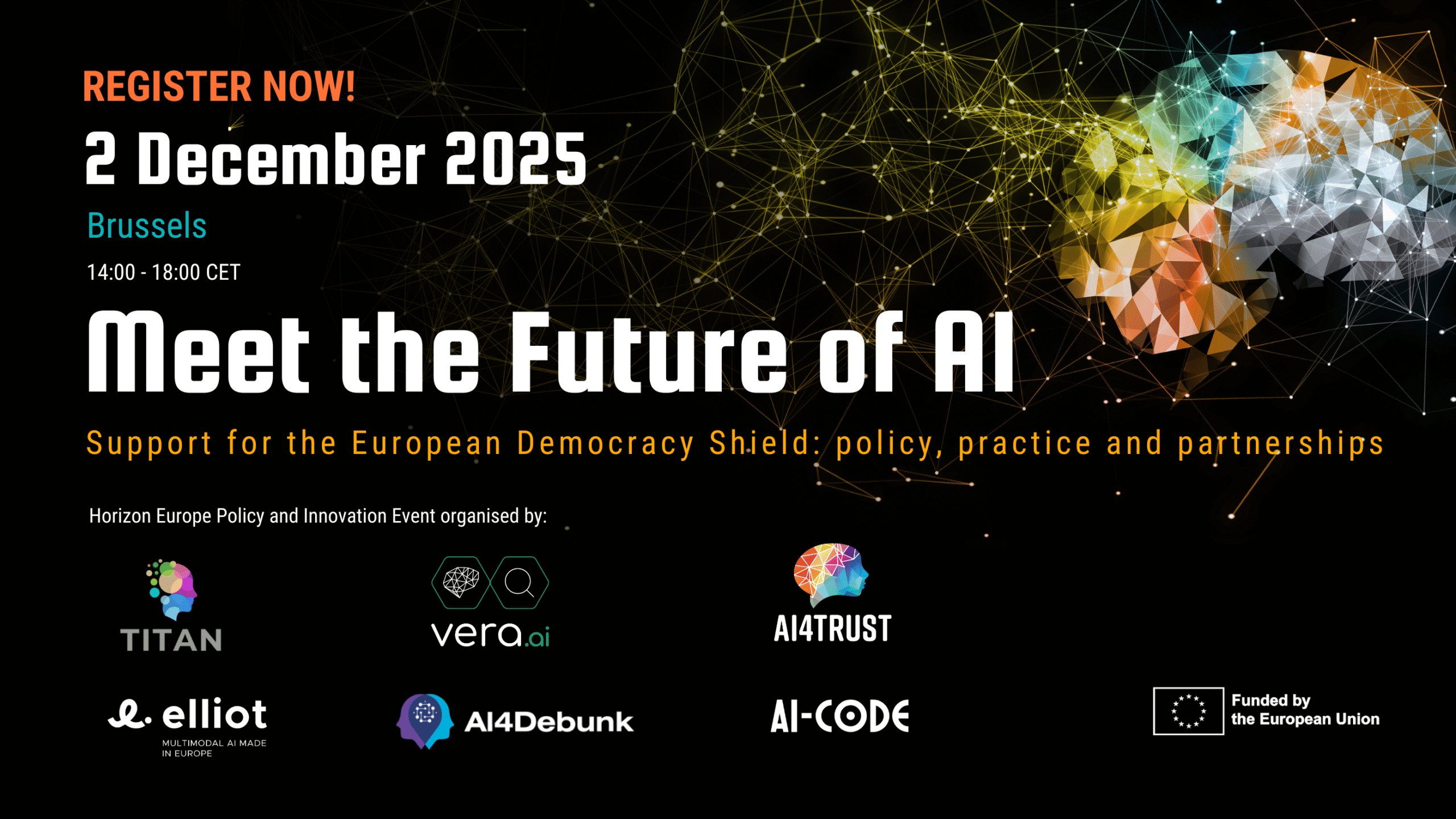

AI4TRUST is a European research and innovation project funded by the European Union’s Horizon Europe programme (AI to fight disinformation, Grant Agreement No. 101070190).

It brings together media organisations, research institutions, and technology partners from across Europe to develop ethical and trustworthy AI tools that support professionals in identifying, analysing, and responding to disinformation.

- What is the AI4TRUST Platform MVP?

The AI4TRUST Platform MVP (Minimum Viable Product) is the first prototype of a collaborative environment that combines AI-driven analysis with human expertise to combat disinformation. It integrates multiple tools designed to help professionals monitor and detect disinformation signals, verify content, and understand online information dynamics.

- What is the goal of the pilot phase?

The pilot phase aims to test the AI4TRUST Platform with real users (journalists, fact-checkers, researchers, and policymakers) and to gather their feedback on the usability, accuracy, and usefulness of the tools developed for the platform.

This feedback will guide the improvement of the platform before its final release.

- Who can participate?

The pilot is open to:

- Journalists, especially those working in verification or newsroom innovation.

- Fact-checkers and media professionals focused on combating disinformation.

- Researchers studying information ecosystems, AI, or media.

- Policymakers involved in digital governance, media regulation, or media literacy.

Participation is open to professionals based in the European Union and associated countries.

- What will participants do?

Participants will:

- Register through the online form.

- Test specific tools from the AI4TRUST Platform according to their professional profile.

- Provide feedback through a short online questionnaire.

- Optionally, join a focus group or workshop to share their experience in more detail.

- How long will participation take?

Testing time varies depending on the participant’s profile:

- Journalists: about 2–2.5 hours

- Fact-checkers: about 3–3.5 hours

- Researchers / Policymakers: about 1–1.5 hours

Testing can be completed flexibly over several days.

- Do participants need technical expertise?

No. The AI4TRUST Platform is designed for media and policy professionals, not developers.

You don’t need coding or AI experience — only familiarity with the challenges of detecting and analysing disinformation.

- What happens with the feedback collected?

All feedback will be analysed by the AI4TRUST consortium to improve the design, usability, and reliability of the platform and its tools.

Responses will remain confidential, and all data will be handled in compliance with the EU’s General Data Protection Regulation (GDPR).

- Is there any compensation for participation?

There is no financial compensation, but participants will gain early insight into the development of European AI tools against disinformation and the opportunity to help shape their design and impact.

- How can I join the pilot?

Simply fill in the online registration form.

You will receive an email with instructions and access details once the testing phase begins.

- Who can I contact for questions?

For any questions about participation or the AI4TRUST project, please see the contacts here.

- Who are the partners of the AI4TRUST Consortium?

The AI4TRUST consortium is a transnational team of 17 partners: 7 research and academic organisations, 3 industrial partners, 4 internationally active media and fact-checking organisations, and 3 media networks. The partners come from 9 EU countries, 1 Associated Country (UK), and 1 Non-Associated Third Country (CH). You can read more about the consortium here.